Security hubris. It’s the phrase we use to refer to our feeling of confidence grounded on assumptions we all have (but may not be aware of or care to admit) about cybersecurity—and, at times, privacy.

It rears its ugly head when (1) we share the common notion that programmers know how to code securely; (2) we cherry-pick perceived-as-easier security and privacy practices over difficult and cumbersome ones, thinking that will be enough to keep our data secure; and (3) we find ourselves signing up to services owned by big-named institutions, believing that—given their strong branding, influence, and seemingly infinite resources—they are securing the privacy of their users’ data by default.

Point three, in particular, applies to how we perceive official mobile apps of financial institutions: We believe they are inherently secure. In a study called “In Plain Sight: The Vulnerability Epidemic in Financial Mobile Apps” [PDF], application security company Arxan Technologies looked to see if this perception is founded. Alas, what they found proved that it is not.

Understanding mobile app vulnerabilities

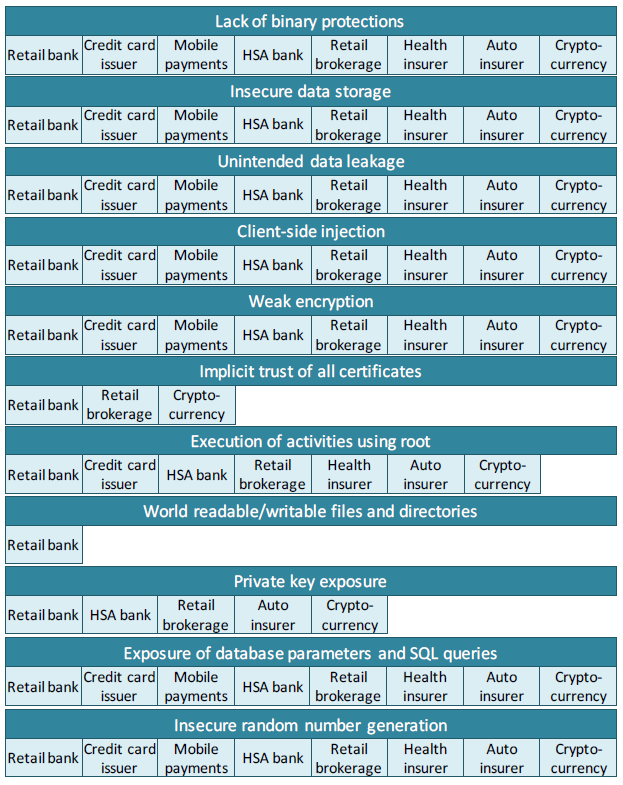

The overall lack of security in financial mobile apps stems from poor or weak app developing practices. According to the study, Arxan found 11 types of vulnerabilities because of this. They are:

- Lack of binary protections. Binary protection is the same as binary hardening or application hardening. It’s the process of making a finished app difficult to tamper with or reverse engineer. Source code obfuscation is a way to harden an app’s security, for example. Unfortunately, the study found that all the financial institution apps they tested had no application security, making it easy for threat actors to decompile the app, find its weaknesses, and create an attack.

- Insecure data storage. Financial mobile apps aren’t particularly good at storing users’ data. They usually store sensitive data in the mobile device’s local or external storage, outside of the sandbox environment, allowing other users to access and exploit it.

- Unintended data leakage. The majority of financial apps share services with other apps on the mobile device, therefore leaving user data accessible to other apps on the device.

- Client-side injection. This high-risk vulnerability, when exploited, allows malicious code to execute on the mobile device via the app itself. This could also allow threat actors to access various functions of the mobile device, adjust trust settings for apps, or, if the owner has put a sandbox in place for added protection, break out of it.

- Weak encryption. An overwhelming number of financial institutions are either using the MD5 encryption algorithm or have implemented a strong cipher incorrectly. This allows for the easy decryption of sensitive data, which threat actors can steal or manipulate.

- Implicit trust of all certificates. Financial apps do not implement checks when presented with web certificates. This makes the app susceptible to man-in-the-middle (MiTM) attacks, especially when fake certificates are involved. Attackers can intercept an exchange between the app and the financial institution, for example, by changing the bank account number from the original owner’s to the criminal’s in the middle of a money transfer transaction without anyone noticing.

- Execution of activities using root. A considerable number of the mobile apps tested could conduct tasks on devices with elevated privileges. Much like an admin to a computer, who has free rein over what he can perform on the machine, criminals are also given similar privileges for the app if compromised. Elevated privileges can grant anyone access to normally-restricted data and the ability to manipulate settings, which are otherwise restricted to normal users.

- World readable/writable files and directories. A fractional number of financial apps allowed for the reading and writing of their files, even when stored in a private data directory. Not only would this cause a level of data leakage, but compromised apps could allow criminals to manipulate said files to change the way the app behaves.

- Private key exposure. Some apps have hard-coded API keys and private certificates either in their code or in one or more of their component files. Since these can be retrieved easily due to the app’s lack of binary protection, attackers could steal and use them to crack encrypted sessions and sensitive data, such as login credentials.

- Exposure of database parameters and SQL queries. As financial apps show readable code when decompiled, attackers with a trained eye could readily know important code bits like sensitive database parameters, SQL queries, and configurations. This allows the attacker to perform SQL injection and database manipulation.

- Insecure random number generator. Apps use a random number generation system for encryption or as part of their function. The better the system, the higher its unpredictability, the stronger the encryption. Most financial apps, however, reply on sub-par generators that makes guessing an easy challenge for attackers.

Small organizations are big on security

When it comes to creating secure financial mobile apps, medium- to large-sized companies could learn a thing or two from smaller organizations. According to the report, “Surprisingly, the smaller companies had the most secure development hygiene, while the larger companies produced the most vulnerable apps.”

Nathan Collier, Senior Malware Intelligence Analyst at Malwarebytes and principal contributor to our Mobile Menace Monday series, felt positive about this finding. “I love that smaller companies that care about their customers did better,” Collier said. “I checked my own credit union’s app, and they seem to be up-to-snuff with most of the things in the report.”

There’s room for improvement

In a recent report from Forbes, researchers found that 25 percent of all malware are targeting financial institutions. Other attacks related to financial services, such as fraud, are also on the uptick.

Given this trend, financial institutions must not only act to protect themselves from direct attacks, but also investigate how they develop the products they offer to clients. Whether apps are made in-house or via third-party, leaving security out of software development and letting programmers continue to write insecure code will cause more harm than good in the end.

Developers do care about security, and vulnerable software is the bane of every business organization. So why not make this an opportunity to innovate and adapt new practices based on the current threat landscape? After all, there’s always room for improvement.