These are trying times for social networks, with endless reports of harassment and abuse not being tackled and many users leaving platforms forever. The major sites such as Facebook and Twitter do what they can, but sheer userbase volume and erroneous automated feedback leave people cold. Bugs such as potentially sharing location data when users enable it alongside other accounts on the same phone are something we’ve come to expect.

Just recently, Twitter and Instagram started trying to filter out what they consider to be erroneous information about vaccines, displaying links to medical information sites amongst search results.

Elsewhere, major portals are trying to establish frameworks for hate speech, or fight the rising tide of trolls and fake news. One of Facebook’s co-founders recently had some harsh words for Zuckerberg, claiming the recent catalogue of issues makes a good case for essentially breaking the site up. It’s not that long since the so-called Cambridge Analytica scandal came to light, and it continues to reverberate around security and privacy circles.

It was always burning

Wherever you look, there’s a whole lot of fire fighting going on and no real solutions on the horizon. In many ways, the sites are too big to fail and the various alternatives that spring up never quite seem to catch on. Mastodon made a huge splash at launch and seems to have a lot more success at tackling abuse than the big guns, but by the same token, lots of people tried it for a month and never went back. Smaller, decentralised instances with dedicated admins/moderators went a long way toward keeping things usable, but ultimately it seems it was just too niche for people more versed in the familiar sights and sounds of Twitter.

Dogpiling from other regions, aimed at destabilizing whichever platforms are in use at any given time, only adds fuel to the fire.

To summarise, not the greatest of times being had by social media portals. Bugs will come and go, and sneaky individuals will always try to game systems with spam or political propaganda. Most people would (probably?) agree that abuse is where the bulk of the issues and concerns lie. There’s nothing more frustrating than seeing people hounded on a service they make use of, with no tools available to fight it.

The fightback begins

Here are some of the ways sites are looking to tackle the abuse challenge before them.

1) Twitter has been sanitising the way accounts interact for some time. With a few changes to the settings, the quality filters make it much less likely that you’ll see drive-by abuse sent your way. Assuming you don’t follow the people sending you nonsense, you’ll miss most of the barrage.

No confirmed email address? No confirmed phone number? Sporting a default profile avatar? Brand new account? Filtering on all of these points and more will help keep the bad tweets at bay. Muting is also a lot more reliable than it used to be. A good opening salvo, but still more can be done.

Using this as a starting point, Twitter is now finding ways to combine these outliers of registration with actual user behaviour and the networks in which they operate to weed out bad elements. For example,

“We’re also looking at how accounts are connected to those that violate our rules and how they interact with each other,” Twitter executives wrote in the post. “These signals will now be considered in how we organize and present content in communal areas like conversation and search.”

This would suggest that even if, say, your account ticks enough boxes to avoid the quality filter, you may not escape the algorithm hiding your tweets if it feels you spend a fair portion of time interacting with abusers. Those abusive accounts may also be discreetly hidden from view when browsing popular hashtags in an effort to prevent them from gaming the system.

It seems Twitter needs to balance hiding abusive messages and clever, sustained trolling against simply removing from plain view content that one may disagree with but that isn’t actually abusive. This will be quite a challenge.

2) Political shenanigans cover the full range of social media sites, coming to prominence in the 2016 US election and beyond. Once large platforms began digging into their data in this realm, they found a non-stop stream of social engineering and manipulation alongside flat-out lies. Some 100,000 political images were shared on open WhatsApp groups in the run-up to the 2018 Brazilian elections, and more than half contained mistruths or lies.

Facebook is commissioning studies into human rights impacts in places like Myanmar due to how the platform is used there. Last year, Oxford University found evidence of high-level political manipulation on social media in 48 countries.

These are serious problems. How are they being addressed long term?

Fix it…or else?

In a word, slowly.

Lawmaker pressure has resulted in some changes at the top. People and organisations wanting to place political ads on Facebook or Google must now supply the identity behind the ad in some regions. This is to combat the dubious tactic known as “dark advertising,” where only the intended target of an ad can see it—usually with zero indication as to who made it in the first place. WhatsApp is cutting down on message forwarding to try and prevent the spread of political misinformation.

Right now, the biggest players are gearing up for the European Parliament Elections—again, with the possible threat of action hanging over them should they fail to do an acceptable job. If they don’t remove bogus accounts quickly enough, if they fail to be rigorous and timely with fact checking and bad article deletion, then regulators could turn up the pressure on the Internet giants.

Canning the spam with a banhammer

It’s not all doom and slow-moving gloom, though. It’s now more common to see platforms making regular public-facing statements about how the war on fakery is going.

Facebook recently made an announcement that they’ve had to remove:

265 Facebook and Instagram accounts, Facebook Pages, Groups and events involved in coordinated inauthentic behavior. This activity originated in Israel and focused on Nigeria, Senegal, Togo, Angola, Niger and Tunisia along with some activity in Latin America and Southeast Asia. The people behind this network used fake accounts to run Pages, disseminate their content and artificially increase engagement. They also represented themselves as locals, including local news organizations, and published allegedly leaked information about politicians.

That’s a significant amount of time sunk into one coordinated campaign. Of course, there are many others and Facebook can only do so much at a time; all the same, this is encouraging. While it may be a case of too little, too late for social media platforms as a whole to start cracking down on abusive patterns now accepted as norms, they’re finally doing something to tackle the rot. All the while, governments are paying close attention.

UKGov steps up to the plate

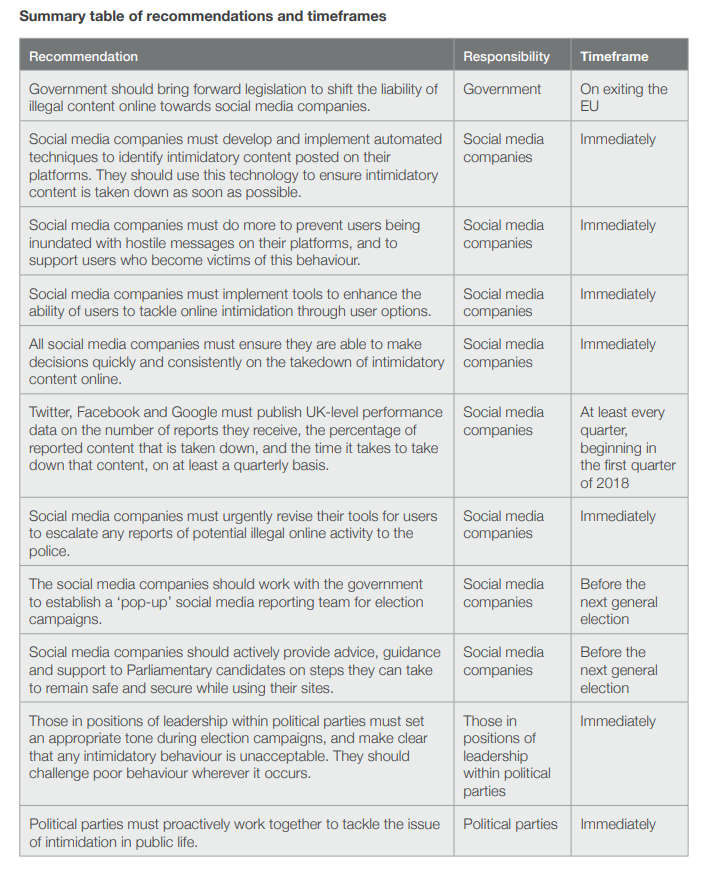

The Jo Cox Foundation will work alongside politicians of all parties to tackle aspects of abuse online, which can lead to catastrophic circumstances. Their paper [PDF format] is released today, and has a particularly lengthy section on social media.

It primarily looks at how people desiring to work in public office are being hammered on all sides by online abuse, and how it then filters down into various online communities. It weighs up the realities of current UK lawmaking…

The posting of death threats, threats of violence, and incitement of racial hatred directed towards anyone (including Parliamentary candidates) on social media is unambiguously illegal. Many other instances of intimidation, incitement to violence and abuse carried out through social media are also likely to be illegal.

…with the reality of the message volume people in public-facing roles are left with:

Some MPs receive an average of 10,000 messages per day

Where do you begin with something like that?

Fix it or else, part 2

Slap bang in the middle of multiple quoted comments from social media sites explaining how they’re tackling online abuse/trolling/political dark money campaigns, we have this:

It is clear to us that the social media companies must take more responsibility for the content posted and shared on their sites. After all, it is these companies which profit from that content. However, it is also clear that those companies cannot and should not be responsible for human pre-moderation of all of the vast amount of content uploaded to their sites.

Doesn’t sound too bad for the social media companies, right? Except they also go on to say this:

Government should bring forward legislation to shift the liability of illegal content online towards social media companies.

Make no mistake, what social media platforms want versus what they’re able to realistically achieve may be at odds with this timetable:

[Image: timetable]

The battle lines, then, are set. Companies know there’s a problem, and it’s become too big to hope for some form of self-resolution. Direct, hands-on action and more investment in abuse/reporting methods, along with more employees to handle such reports, are sorely needed. At this point, if social media organisations can’t set this one to rest, then it looks as though someone else may go and do it for them. Depending on how that pans out, we may feel the after effects for some time to come.