The Internet Watch Foundation (IWF), a not-for-profit organization in England whose mission is “to eliminate child sexual abuse imagery online”, has recently released its annual analysis of the victims of online predators and of the sexual abuse imagery currently prevalent online. The report covers the whole of 2020.

IWF annual report: what the numbers reveal

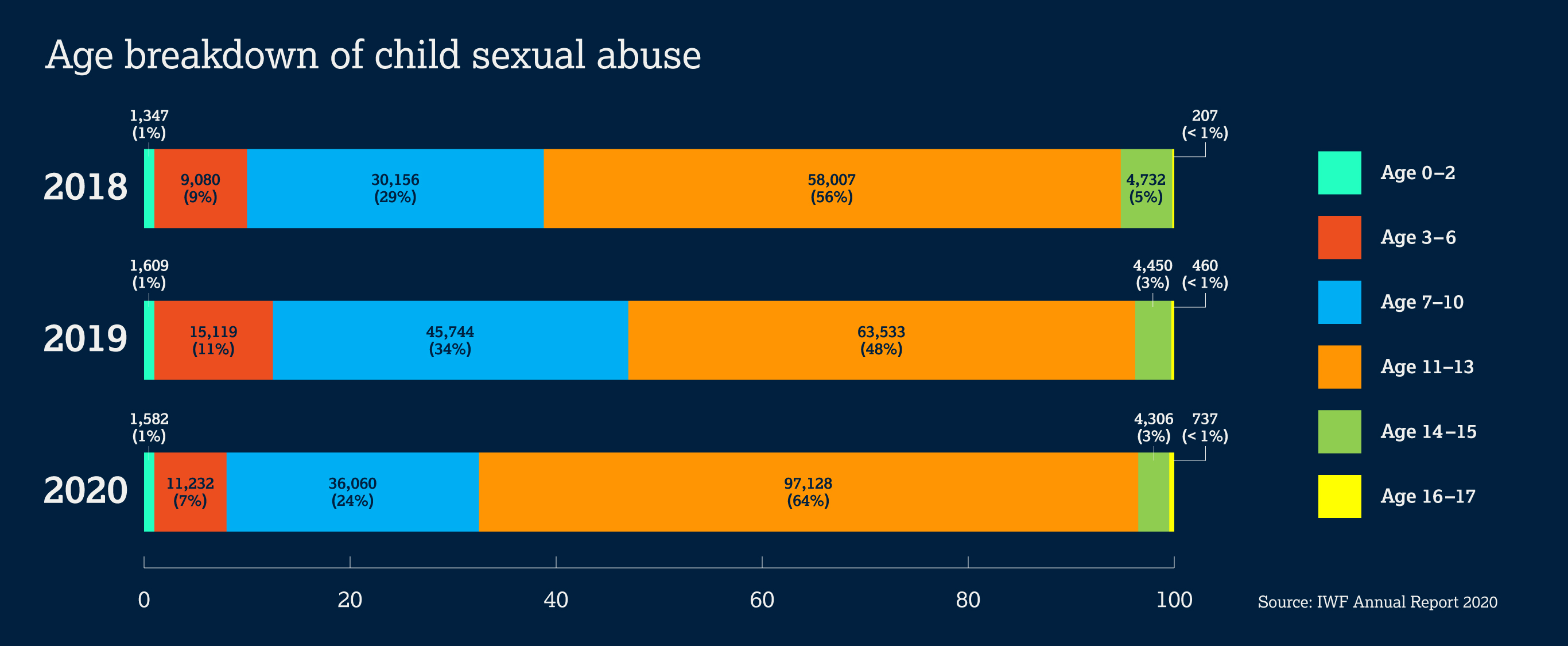

The IWF assessed nearly 300,000 reports in 2020, of which a little more than half (153,383) were confirmed as pages containing imagery depicting child sexual abuse. Compared with 2019, this is a 16 percent increase in the number of pages hosting or sharing such imagery.

From these confirmed reports, the IWF identified the following trends:

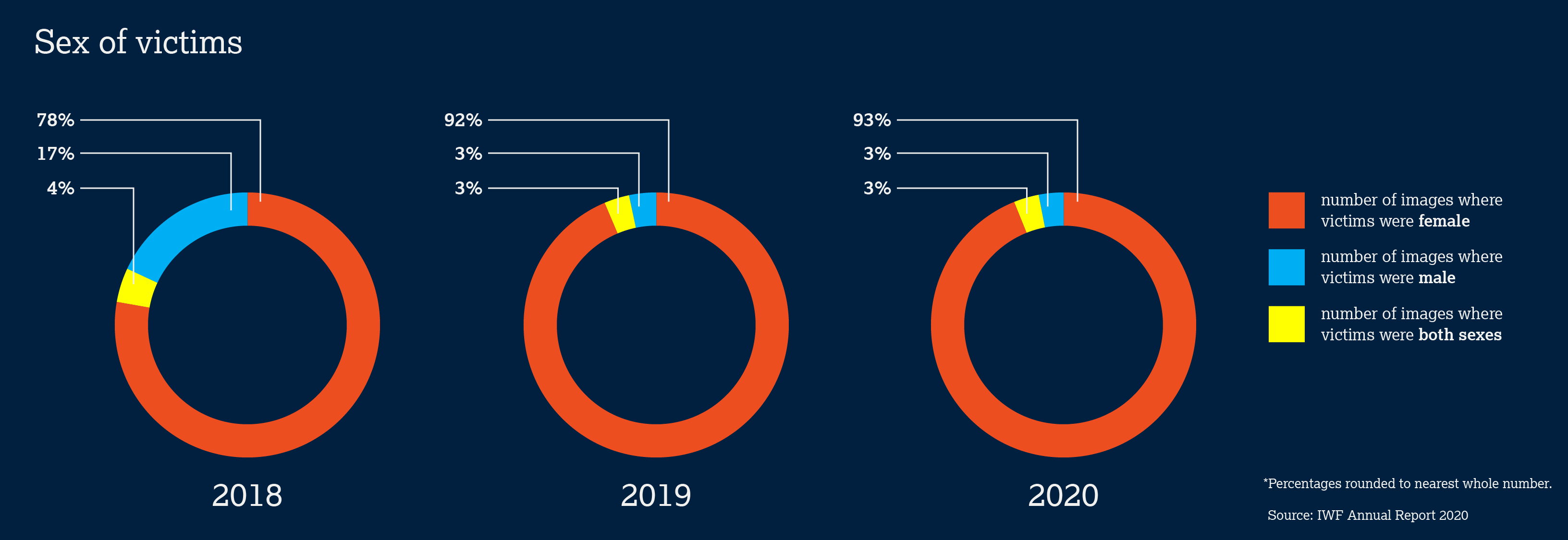

The majority of child victims are female. The number of female child victims has increased since 2019. In 2020, the IWF noted that 93 percent of the child sexual abuse material (CSAM) it assessed involved at least one girl, a 15 percent increase compared with 2019.

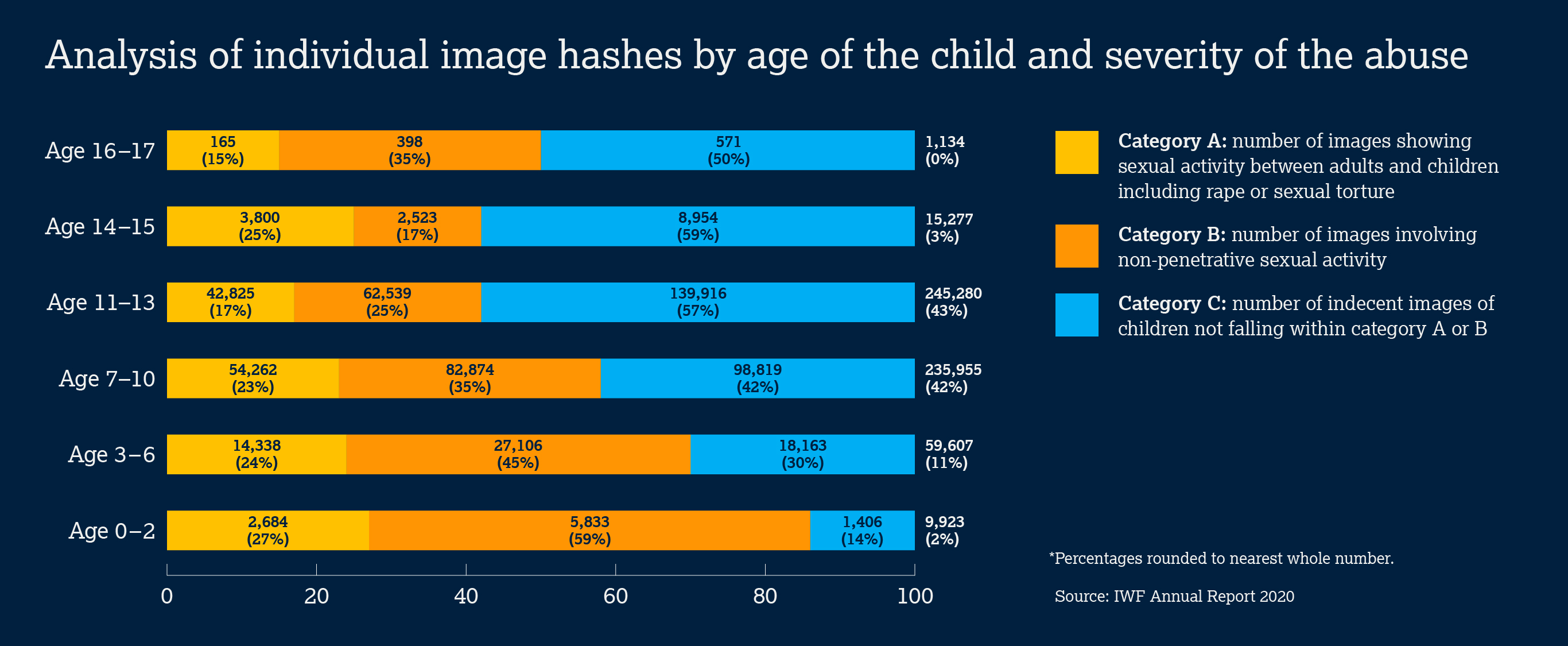

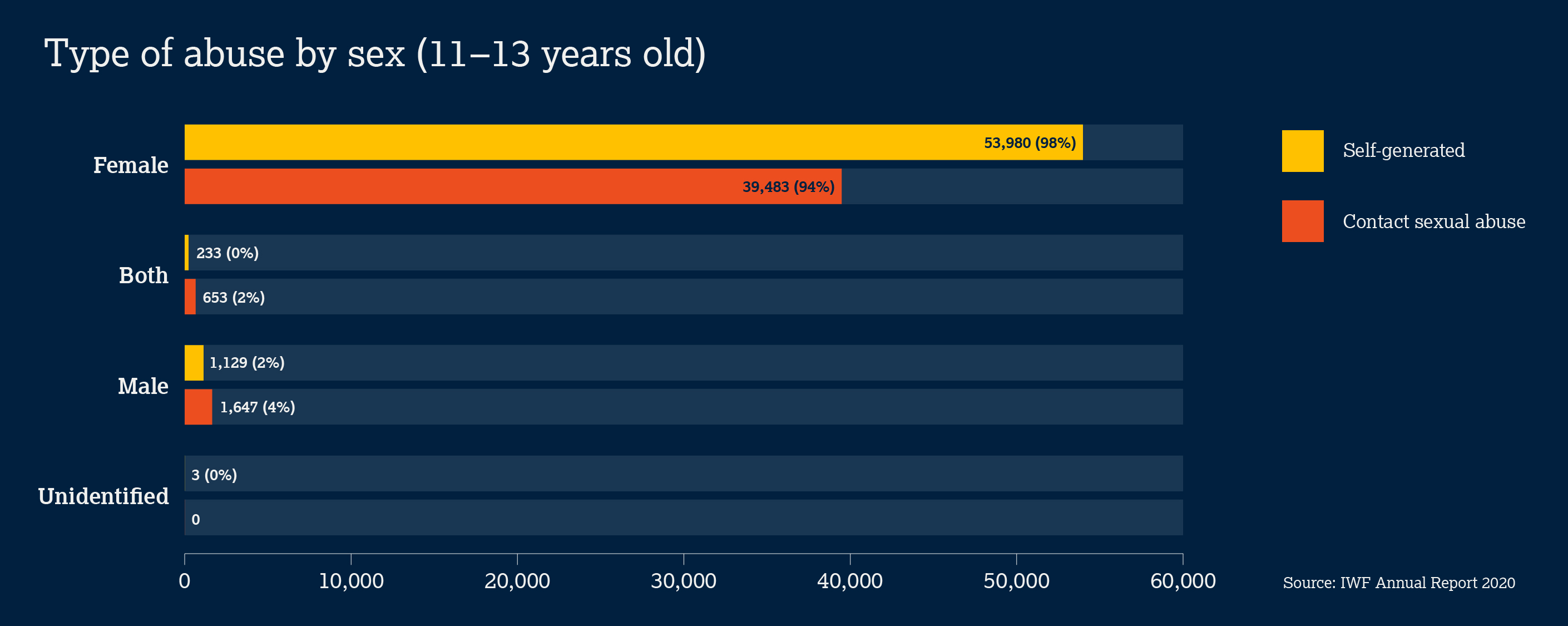

Online predators mostly target children aged 11 to 13. The IWF counted a total of 245,280 hashes (unique codes, each representing a distinct picture, video, or other piece of CSAM), the majority of which involve girls aged 11 to 13. The next most common age group is children aged 7 to 10.

To learn more about the IWF Hash List, watch this YouTube video.

Tink Palmer, CEO of the Marie Collins Foundation, a charity that helps child victims and their families recover from sexual abuse involving technology, explained to the IWF why online predators gravitate toward these age groups.

“In many cases it is pre-pubescent children who are being targeted. They are less accomplished in their social, emotional and psychological development. They listen to grown-ups without questioning them, whereas teenagers are more likely to push back against what an adult tells them.”

Self-generated child sexual abuse content is on the rise. In 2020, 44 percent of the images and videos the IWF analyzed were classed as “self-generated” child sexual abuse content. Reports of such content rose 77 percent, from 38,400 in 2019 to 68,000 in 2020.

“Self-generated” content means that the child victims themselves created the media that online predators then spread within and beyond particular internet platforms. This content is created using smartphones or webcams, predominantly by girls aged 11 to 13 in their own homes (usually their bedrooms), and during periods of COVID-19 lockdown.

Webcam content is often produced on online services with a live-streaming feature, such as Omegle.

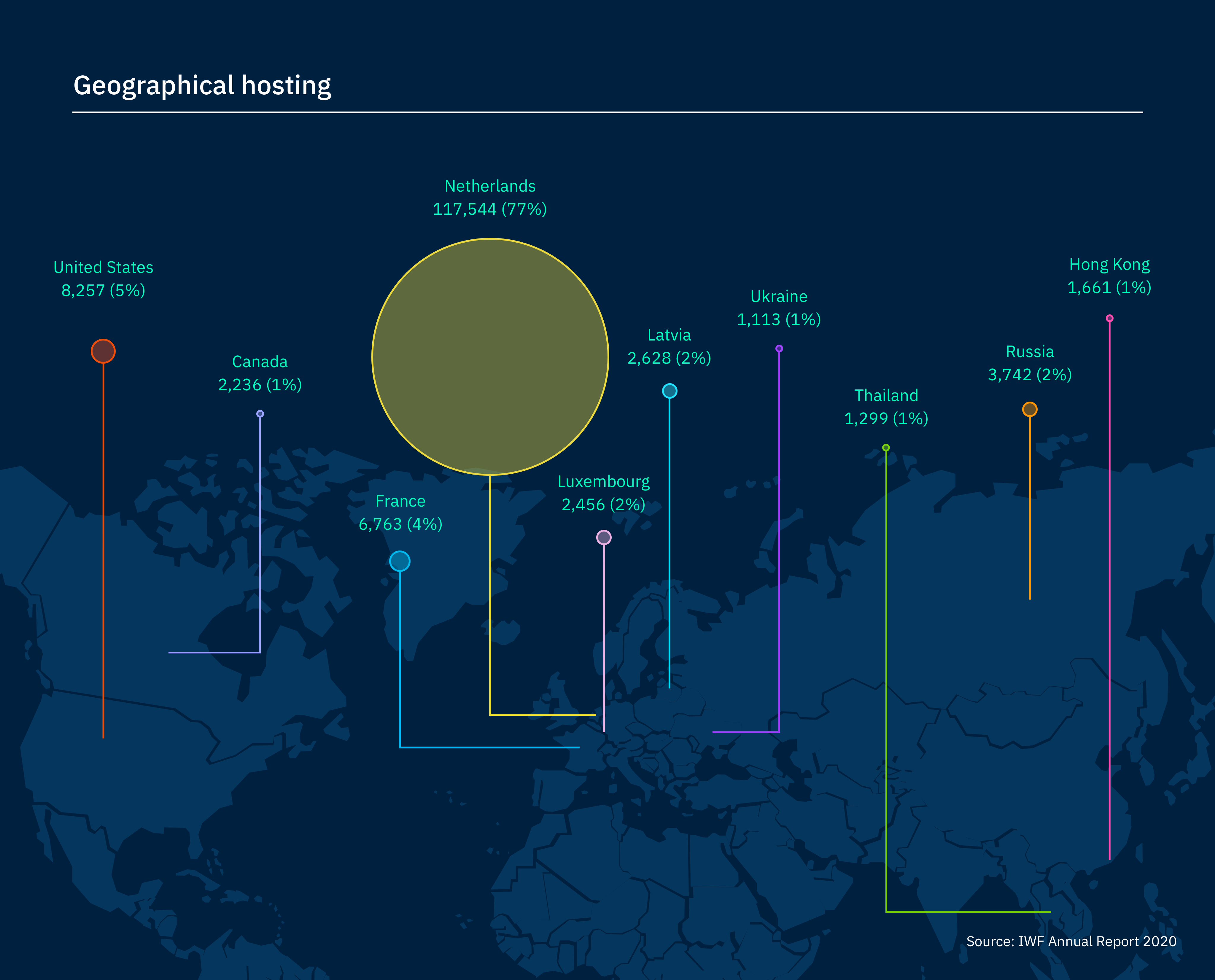

Europe hosts almost all child sexual abuse URLs. The IWF found that 90 percent of the URLs it analyzed and confirmed as hosting CSAM were located in Europe (which, for this purpose, the IWF defines as including Russia and Turkey). Within Europe, the Netherlands is the top location for hosting CSAM, a pattern the IWF has seen consistently over the years.

Shutting the door on child sexual abusers

The IWF report highlights a worrying trend in child victimology: online predators not only groom their targets but also coerce and bully them into doing their bidding. Predators also tend to frequent platforms that many teenage girls use.

Sadly, no single measure or piece of technology can solve the problem of child exploitation. The best protection for children is effective parenting, and the IWF urges parents and guardians to T.A.L.K. to their children. T.A.L.K. is a set of practical, actionable steps that parents and carers can take to guide their children toward a safer online experience as they grow up.

T.A.L.K. stands for:

* Talk to your child about online sexual abuse. Start the conversation – and listen to their concerns.

* Agree ground rules about the way you use technology as a family.

* Learn about the platforms and apps your child loves. Take an interest in their online life.

* Know how to use tools, apps and settings that can help to keep your child safe online.

If images or videos of your child have been shared online, it’s important not to blame your child. Instead, reassure them and offer support. Finally, report the images or videos to the police, the IWF, Childline, or your local equivalent.