When it comes to securing your sensitive, personally identifiable information against criminals who can engineer countless ways to snatch it from under your nose, experts have long recommended the use of strong, complex passwords. Using long passphrases with combinations of numbers, letters, and symbols that cannot be easily guessed has been the de facto security guidance for more than 20 years. But does it stand up to scrutiny?

Users typically prefer a short, easy-to-remember password for convenience, especially since they average more than 27 different online accounts that require credentials. However, such a password has low entropy, making it easy for hackers to guess or brute-force.
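To put a rough number on "low entropy," here is a minimal sketch that estimates password entropy as length times log2 of the alphabet size. The alphabets and the two example lengths are illustrative assumptions, not figures from any study, and the formula only holds for passwords chosen uniformly at random. Passwords picked by humans usually have far less entropy than this estimate suggests.

```python
import math
import string


def entropy_bits(length: int, alphabet_size: int) -> float:
    """Entropy in bits of a secret chosen uniformly at random:
    length * log2(alphabet_size)."""
    return length * math.log2(alphabet_size)


def guesses_to_exhaust(bits: float) -> float:
    """Number of candidates an attacker must try to cover the whole space."""
    return 2.0 ** bits


# Illustrative alphabets (assumptions for this sketch).
LOWERCASE = len(string.ascii_lowercase)  # 26 symbols
PRINTABLE = len(string.ascii_letters + string.digits + string.punctuation)  # 94

short = entropy_bits(6, LOWERCASE)    # a short, convenient password
long_ = entropy_bits(16, PRINTABLE)   # a long, complex password

print(f"6 lowercase letters:  {short:.1f} bits")
print(f"16 printable symbols: {long_:.1f} bits")
print(f"Search space ratio:   {guesses_to_exhaust(long_ - short):.2e}")
```

Every extra bit doubles the number of guesses a brute-force attack needs, which is why length and a larger character set matter so much.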

If we factor in the consistent use of a single low-entropy password across all online accounts, despite repeated warnings, then we have a crisis on our hands—especially because remembering 27 unique, complex passwords, PIN codes, and answers to security questions is likely overwhelming for most users.

Tech developers are now pushing to replace faulty and forgettable passwords with something that all human beings have: ourselves.

Bits of ourselves, to be exact. Dear reader, let’s talk biometrics.

Biometrics then and now

Biometrics, or the use of our unique physiological traits to identify and/or verify our identities, has been around for much longer than our computing devices. Handprints, which are found in caves that are thousands of years old, are considered one of the earliest forms of physiological biometric modality. Portuguese historian and explorer João de Barros recorded in his writings that 14th-century Chinese merchants used their fingerprints to finalize transaction deals, and that Chinese parents used fingerprints and footprints to differentiate their children from one another.

Hands down, human beings are the best biometric readers—it’s innate in all of us. Studying someone’s facial features, height, weight, or notable body markings, for example, is one of the most basic and earliest means of identifying unfamiliar individuals without knowing or asking for their name. Recognizing familiar faces among a sea of strangers is a form of biometrics, as is meeting new people or determining which person out of a lineup committed a certain crime.

As the population boomed, the process of telling one human being from another became much more challenging. Listing facial features and body markings was no longer enough to accurately track individual identities at the macro level. Therefore, we developed sciences (anthropometry, from which biometrics stems), systems (the Henry Classification System), and technologies to aid us in this nascent pursuit. Biometrics didn’t really become “a thing” until the 1960s, the same era in which computer systems emerged.

Today, many biometric modalities are in place for identification, classification, education, and, yes, data protection. These include fingerprints, voice recognition, iris scanning, and facial recognition. Many of us are familiar with these modalities and use them to access our data and devices every day.

Are they the answer to the password problem? Let’s look at some of these biometric modalities, where they are normally used, how widely adopted and accepted they are, and some of the security and privacy concerns surrounding them.

Fingerprint scanning/recognition

Fingerprint scanning is perhaps the most common, widely used, and accepted form of biometric modality. Historically, fingerprints (and in some cases, full handprints) were used as a means to denote ownership, as we’ve seen in cave paintings, and to prevent impersonation and the repudiation of contracts, as Sir William Herschel did when he was part of the Indian Civil Service in the 1850s.

Initially, only those in law enforcement could collect and use fingerprints to identify or verify individuals. Today, billions of people around the world are carrying a fingerprint scanner as part of their smartphone devices or smart payment cards.

While fingerprint scanning is convenient, easy to use, and fairly accurate (less so for the elderly, as skin elasticity decreases with age), it can be circumvented, and white hat hackers have proven this time and time again.

When Apple first introduced TouchID, its then-flagship feature on the 2013 iPhone 5S, the Chaos Computer Club (CCC) from Germany bypassed it a day after its reveal. A similar incident happened in 2019, when Samsung debuted the Galaxy S10. Security researchers from Tencent even demonstrated that any fingerprint-locked smartphone can be hacked, whether it uses capacitive, optical, or ultrasonic technology.

“We hope that this finally puts to rest the illusions people have about fingerprint biometrics,” said Frank Rieger, spokesperson of the CCC, after the group defeated the TouchID. “It is plain stupid to use something that you can’t change and that you leave everywhere every day as a security token.”

Voice recognition

Otherwise known as speaker recognition or speech recognition, voice recognition is a biometric modality that, at base level, recognizes sound. However, in recognizing sound, this modality must also measure complex physiological components: the physical size, shape, and health of a person’s vocal cords, lips, teeth, tongue, and mouth cavity. In addition, voice recognition tracks behavioral components, such as the accent, pitch, tone, talking pace, and emotional state of the speaker.

Voice recognition is used today in computer operating systems, as well as in mobile and IoT devices for command and search functionality: Siri, Alexa, and other digital assistants fit this profile. There are also software programs and apps, such as translation and transcription services, reading assistance, and educational programs designed with voice recognition, too.

There are two variants of voice recognition in use today: speaker-dependent and speaker-independent. Speaker-dependent voice recognition requires training on a user’s voice. It needs to be accustomed to the user’s accent and tone before recognizing what was said. This is the type that is used to identify and verify user identities. Banks, tax offices, and other services have bought into the notion of using voice for customers to access their sensitive financial data. The caveat here is that only one person can use this system at a time.

Speaker-independent voice recognition, on the other hand, doesn’t need training and recognizes input from multiple users. Instead, it is programmed to recognize and act on certain words and phrases. Examples of speaker-independent voice recognition technology are the aforementioned virtual assistants, such as Windows’ Cortana, and automated telephone interfaces.

But voice recognition has its downsides, too. While it has improved in accuracy by leaps and bounds over the last 10 years, there are still some issues to solve, especially for women and people of color. Like fingerprint scanning, voice recognition is also susceptible to spoofing. It’s also easy to taint the quality of a voice recording with a poor microphone or background noise that may be difficult to avoid.

To prove that using voice to authenticate for account access is an insufficient method, researchers from Salesforce broke voice authentication at Black Hat 2018 using machine learning and voice synthesis, a technology that can create lifelike human voices. They also found that the synthesized voice’s quality only needed to be good enough to do the trick.

“In our case, we only focused on using text-to-speech to bypass voice authentication. So, we really do not care about the quality of our audio,” said John Seymour, one of the researchers. “It could sound like garbage to a human as long as it bypasses the speech APIs.”

All this, and we haven’t even talked about voice deepfakes yet. Imagine fraudsters having the ability to pose as anyone they want using artificial intelligence and a five-second recording of their voice. As applicable as voice recognition is as a technology, it’s perhaps the weakest form of biometric identity verification.

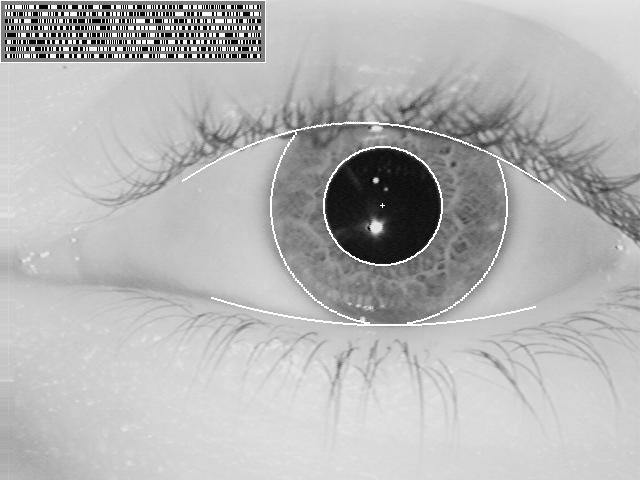

Iris scanning or iris recognition

Advocates of iris scanning claim that iris images are quicker and more reliable than fingerprint scanning as a means of identification, as irises are less likely to be altered or obscured than fingerprints.

Iris scanning is usually conducted with invisible infrared light that passes over the iris. The unique patterns and colors of the iris are read, analyzed, and digitized, then compared against a database of stored iris templates for either identification or verification.
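To make the difference between verification and identification concrete, here is a toy sketch. It assumes a template is a short string of bits and that two templates are compared by the fraction of bits that differ (a Hamming distance). The 16-bit size, the threshold, and the names are illustrative assumptions only and do not describe any particular vendor’s scanner.

```python
from typing import Dict, Optional

TEMPLATE_BITS = 16       # toy size; real templates are far larger
MATCH_THRESHOLD = 0.25   # illustrative cutoff, not a vendor value


def hamming_fraction(a: int, b: int, bits: int = TEMPLATE_BITS) -> float:
    """Fraction of bit positions in which two templates differ."""
    mask = (1 << bits) - 1
    return bin((a ^ b) & mask).count("1") / bits


def verify(probe: int, enrolled: int) -> bool:
    """1:1 check: does the probe match the claimed identity's template?"""
    return hamming_fraction(probe, enrolled) <= MATCH_THRESHOLD


def identify(probe: int, database: Dict[str, int]) -> Optional[str]:
    """1:N search: return the closest enrolled identity, or None if no
    stored template falls within the threshold."""
    if not database:
        return None
    name, template = min(
        database.items(),
        key=lambda item: hamming_fraction(probe, item[1]),
    )
    return name if verify(probe, template) else None


# Hypothetical enrolled templates.
database = {
    "alice": 0b1011_0010_1110_0001,
    "bob": 0b0110_1001_0011_1100,
}

# A fresh scan of alice's iris with one bit of sensor noise.
probe = 0b1011_0010_1110_0101

print(verify(probe, database["alice"]))  # claimed identity: alice
print(identify(probe, database))         # no claim, search everyone
```

Verification answers "is this person who they claim to be?" while identification answers "who is this?", which is the mode that makes scanning from afar a privacy concern.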

Unlike fingerprint scanning, which requires a finger to be pressed against a reader, iris scanning can be done both within close range and from afar, as well as standing still and on-the-move. These capabilities raise significant privacy concerns, as individuals and groups of people can be surreptitiously scanned and captured without their knowledge or consent.

There’s an element of security concern with iris scanning as well: Third parties normally store these templates, and we have no idea how iris templates—or all biometric templates—are stored, secured, and shared. Furthermore, scanning the irises of children under 4 years old generally produces scans of inferior quality compared to their adult counterparts.

Iris scanners, especially those that market themselves as airtight or unhackable, haven’t escaped cybercriminals’ radar. In fact, such claims often fuel their motivation to prove the technology wrong. In 2019, eyeDisk, the purported “unhackable USB flash drive,” was hacked by white hat hackers at PenTest Partners. After making a splash breaking Apple’s TouchID in 2013, the CCC hacked Samsung’s “ultra secure” iris scanner for the Galaxy S8 four years later.

“The security risk to the user from iris recognition is even bigger than with fingerprints as we expose our irises a lot,” said Dirk Engling, a CCC spokesperson. “Under some circumstances, a high-resolution picture from the Internet is sufficient to capture an iris.”

Facial recognition

This biometric modality has been all the rage over the last five years. Facial recognition systems analyze images or video of the human face by mapping its features and comparing them against a database of known faces. Facial recognition can be used to grant access to accounts and devices that are typically locked by other means, such as a PIN, password, or other form of biometric. It can be used to tag photos on social media or optimize image search results. And it’s often used in surveillance, whether to prevent retail crime or help police officers identify criminals.

As with iris scanners, a concern of security and privacy advocates is the ability of facial recognition technology to be used in combination with public (or hidden) cameras that don’t require knowledge or consent from users. Combine this with a lack of federal regulation, and you once again have an example of technology that has raced far ahead of our ability to define its ethical use. Accuracy is another point of contention, and multiple studies have backed up its imprecision, especially when identifying people of color.

Private corporations, such as Apple, Google, and Facebook, have developed facial recognition technology for identification and authentication purposes, while governments and law enforcement implement it in surveillance programs. However, citizens, the target of this technology, have both tentatively embraced facial recognition as a password replacement and rallied against its Big Brother application via government monitoring.

When talking about the use of facial recognition technology for government surveillance, China is perhaps the top country that comes to mind. To date, China has at least 170 million CCTV cameras, and this number is expected to increase almost threefold by 2021.

With this biometric modality being used at universities, shopping malls, and even public toilets (to prevent people from taking too many tissues), surveys show Chinese citizens are wary of the data being collected. Meanwhile, the facial recognition industry in China has been the target of US sanctions for violations of human rights.

“AI and facial recognition technology are only growing and they can be powerful and helpful tools when used correctly, but can also cause harm with privacy and security issues,” wrote Nicole Martin in Forbes. “Lawmakers will have to balance this and determine when and how facial technology will be utilized and monitor the use, or in some cases abuse, of the technology.”

Behavioral biometrics

Otherwise known as behaviometrics, this modality involves the reading of measurable behavioral patterns for the purpose of recognizing or verifying a person’s identity. Unlike other biometrics mentioned in this article, which are measured in a quick, one-time scan (static biometrics), behavioral biometrics is built around continuous monitoring and verification of traits and micro-habits.

This could mean, for example, that from the time you open your banking app to the time you have finished using it, your identity has been checked and re-checked multiple times, assuring your bank that you still are who you claim to be for the entire time. The bonus? The process is frictionless, so users don’t realize the analysis is happening in the background.
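Here is a minimal sketch of what "checked and re-checked" could look like. It assumes a single hypothetical trait (milliseconds between screen taps), a baseline built from the user’s past sessions, and a simple deviation score. Real products combine many signals and use far more sophisticated models, so treat the class, the threshold, and the numbers as illustrative only.

```python
from statistics import mean, stdev
from typing import List, Sequence


class BehaviorProfile:
    """Baseline built from a user's past measurements of one trait
    (hypothetical example: milliseconds between screen taps)."""

    def __init__(self, history: Sequence[float]) -> None:
        self.mean = mean(history)
        self.stdev = stdev(history)  # assumes the history is not constant

    def deviation(self, sample: float) -> float:
        """How many standard deviations the sample lies from the baseline."""
        return abs(sample - self.mean) / self.stdev


def monitor_session(
    profile: BehaviorProfile,
    samples: Sequence[float],
    max_deviation: float = 3.0,
    window: int = 3,
) -> List[bool]:
    """Re-check identity after every new sample.

    Each check averages the deviation over the last `window` samples to
    smooth out ordinary variation from one tap to the next.
    """
    results = []
    for i in range(len(samples)):
        recent = samples[max(0, i - window + 1): i + 1]
        score = mean(profile.deviation(s) for s in recent)
        results.append(score <= max_deviation)
    return results


profile = BehaviorProfile([180, 200, 190, 210, 195, 205, 185, 200])

# The first four taps resemble the owner; the last three do not.
session = [198, 188, 207, 193, 420, 435, 410]
print(monitor_session(profile, session))
# prints [True, True, True, True, False, False, False]
```

The user never sees a prompt. The check simply starts failing once the behavior stops looking like theirs, at which point an app could ask for another factor.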

Private institutions have taken notice of behavioral biometrics—and the technology and systems behind this modality—because it offers a multitude of benefits. It can be tailored according to an organization’s needs. It’s efficient and can produce results in real time. And it’s secure, since biometric data of this kind is difficult to steal or replicate. The data retrieved from users is also highly accurate.

Like any other biometric modality, using behavioral biometrics brings up privacy concerns. However, the data collected by a behavioral biometric application is already being collected by device or network operators, which is recognized by standard privacy laws. Another plus for privacy advocates: Behavioral data is not defined as personally identifiable, although it’s being considered for regulation so that users are not targeted by advertisers.

While voice recognition (which we mentioned above), keystroke dynamics, and signature analysis are all under the umbrella of behavioral biometrics, take note that organizations that employ a behavioral biometric scheme do not use these modalities.

Biometrics vs. passwords

At face value, any of the biometric modalities available today might appear to be superior to passwords. After all, one could argue that it’s easy for numeric and alphanumeric passwords to be stolen or hacked. Just look at the number of corporate breaches and millions of affected users bombarded by scams, phishing campaigns, and identity theft. Meanwhile, theft of biometric data has not yet happened at this scale (to our knowledge).

While this argument may have some merit, remember that when a password is compromised, it can be easily replaced with another password, ideally one with higher entropy. However, if biometric data is stolen, it’s impossible for a person to change it. This is, perhaps, the top argument against using biometrics.

Because a number of our physiological traits can be publicly observed, recorded, scanned from afar, or readily taken as we leave them everywhere (fingerprints), it is argued that consumer-grade biometrics—without another form of authentication—are no more secure than passwords.

Not only that, but the likelihood of cybercriminals using such data to steal someone’s identity or to commit fraud will increase significantly over time. Biometric data may not (yet) open new banking accounts under your name, but it can be abused to gain access to devices and establishments that have a record of your biometric. Thanks to new “couch-to-plane” schemes that several airports are beginning to adopt, stolen biometrics can now put a fraudster on a plane to any destination they wish to go.

What about DNA as passwords?

Using one’s DNA as a password is a concept that is far from far-fetched, although it is not widely known or used in practice. In a recent paper, authors Madhusudhan R and Shashidhara R have proposed the use of a DNA-based authentication scheme within mobile environments using a Hyper Elliptic Curve Cryptosystem (HECC), allowing for greater security in exchanging information over a radio link. This is not only practical but can also be implemented on resource-constrained mobile devices, the authors say.

This may sound good on paper, but as the idea is still purely theoretical, privacy-conscious users will likely need a lot more convincing before they consider using their own DNA for verification purposes. While DNA may seem like a cool and complicated way to secure our sensitive information, we leave DNA behind all the time, much like our fingerprints. And, just as we can’t change our fingerprints, our DNA is permanent. Once it is stolen, we can never safely use it for verification again.

Furthermore, the once-promising idea of handing over your DNA to be stored in a giant database in exchange for learning your family’s long-forgotten secrets seems to have lost its charm. This is due to increased awareness among users of the privacy concerns surrounding commercial DNA testing, including how the companies behind it have been known to hand over data to pharmaceutical companies, marketers, and law enforcement. Not to mention, studies have shown that such test results are inaccurate about 40 percent of the time.

With so many concerns, perhaps it’s best to leave behind the notion of using DNA as your proverbial keys to the kingdom and focus instead on improving how you create, use, and store passwords.

Passwords (for now) are here to stay

As we have seen, biometrics isn’t the be-all and end-all most of us expected. However, this doesn’t mean biometrics cannot be used to secure what you hold dear. When we do use them, they should be part of a multi-factor authentication scheme, not a password replacement.

What does that look like in practice? For top-level security that solves the issue of having to remember so many complex passwords, store your account credentials in a password manager. Create a long, complex passphrase as the master password. Then, use multi-factor authentication to verify the master password. This might involve sending a passcode to a second device or email address to be entered into the password manager. Or, if you’re an organization willing to invest in biometrics, use a modality such as voice recognition to speak an authentication phrase.
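As a rough illustration of the passcode step, here is a minimal sketch of a one-time code that expires and can only be used once. The class name, the five-minute lifetime, and the six-digit length are assumptions made for this example, delivery to the second device is left out entirely, and this is not how any specific password manager implements it.

```python
import hmac
import secrets
import time
from typing import Optional


class PasscodeChallenge:
    """One-time passcode that would be sent to the user's second device."""

    def __init__(self, ttl_seconds: int = 300, digits: int = 6) -> None:
        # secrets draws from the operating system's secure random source.
        self.code = f"{secrets.randbelow(10 ** digits):0{digits}d}"
        self.expires_at = time.time() + ttl_seconds
        self.used = False

    def verify(self, submitted: str, now: Optional[float] = None) -> bool:
        """Accept the code once, before it expires."""
        now = time.time() if now is None else now
        if self.used or now > self.expires_at:
            return False
        # Constant-time comparison avoids leaking how many digits matched.
        if hmac.compare_digest(submitted.encode(), self.code.encode()):
            self.used = True
            return True
        return False


def unlock_vault(master_ok: bool, challenge: PasscodeChallenge, submitted: str) -> bool:
    """Both factors must pass: the master passphrase and the passcode."""
    return master_ok and challenge.verify(submitted)


challenge = PasscodeChallenge()
print(unlock_vault(True, challenge, challenge.code))  # first use succeeds
print(unlock_vault(True, challenge, challenge.code))  # replaying it fails
```

Unlike a fingerprint or an iris, a passcode like this is trivially replaced: every login gets a new one, so a stolen code is worthless minutes later.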

So, are biometrics here to stay? Definitely. But so are passwords.