Twitter’s recent changes to checkmark verification continue to cause chaos, this time in the realm of potentially dangerous misinformation. A checkmarked account claimed to show images of explosions close to important landmarks like the Pentagon. These images quickly went viral despite being AI-generated and containing multiple obvious errors visible to anyone who looked closely at the supposed photographs.

How did this happen?

Until recently, the social media routine when an important news story broke went as follows:

- Something happens, and it’s reported on by verified accounts on Twitter

- This news filters out to non-verified accounts

“Verified” status is now available to anybody willing to pay for the $8-a-month Twitter Blue service. There’s no real guarantee that a checkmarked video game company, celebrity, or news source is in fact who they claim to be. This new policy has already injected plenty of mayhem into social media. A fake Nintendo account posting offensive images and the infamous “Insulin is free” tweet that caused a stock dive spring to mind.

People have taken the “anything goes in checkmark land” approach and are running with it.

What’s happening now is:

- Fake stories are promoted by checkmarked accounts

- Those stories filter out to non-checkmarked accounts

- People in search of facts try to find non-checkmarked (but real) journalists and news agencies while ignoring the checkmarked accounts.

This is made more difficult by changes to how Twitter displays replies, as paid accounts “float” to the top of any conversation. As a result, when a checkmarked account goes viral through a combination of real people, genuine “verified” accounts, and those looking to spread misinformation, the outcome can be disastrous.

In this case, several checkmarked accounts claimed there had been explosions near the Pentagon and then the White House. Bellingcat investigators quickly exposed the imagery for what it was: poorly done, with errors galore.

The images looked odd, with no people, mashed-up railings, and walls that melted into one another, but that made no difference. The visibility of the bogus tweets rocketed, and soon there was the possibility of a needless terror-attack panic.

Many US government, law enforcement, and first responder accounts no longer have a checkmark because they declined to pay for Twitter Blue. Thankfully, some have the new grey Government badge, and the Arlington County Fire Department was able to confirm that there was no explosion.

What’s interesting about this incident is that it shows you can post terrible, amateur imagery with no attempt to polish it, and enough people will still believe it to make it go viral. In this case, it spread so widely that the Pentagon Force Protection Agency (PFPA) had to help debunk it. As Bleeping Computer notes, the PFPA isn’t even verified anymore.

There is no easy answer or collection of tips for avoiding this kind of thing on social media, at least not on Twitter as it is currently set up. A once-valuable source of breaking, potentially critical warnings about dangerous weather or major incidents simply cannot be trusted the way it used to be.

The very best you can do is follow government or emergency response accounts that sport the grey badge. There are also gold checkmarks for “verified organisations”, but even there problems remain. A fake Disney Junior account was recently granted a gold checkmark out of the blue, and chaos ensued.

No, South Park is not coming to Disney Junior.

As for the aim of the accounts pushing misinformation, it’s hard to say. Many paid accounts simply want to troll. Others could be part of dedicated dis/misinformation farms, run by individuals or collectives. It’s also common to see accounts go viral with one kind of content and then switch to something else entirely once they have gained enough reach. The new content might be about a different topic, or it could be something harmful.

Even outside the realm of paid accounts, misinformation and fakes can flourish. Just recently, Twitter saw the return of fake NHS nurse accounts, following a similar wave back in 2020.

Should any of the fake nurse accounts decide to pay $8 a month, they’ll have the same posting power as the profiles pushing fake explosions. Spam is also becoming a big problem in both public posts and private messages:

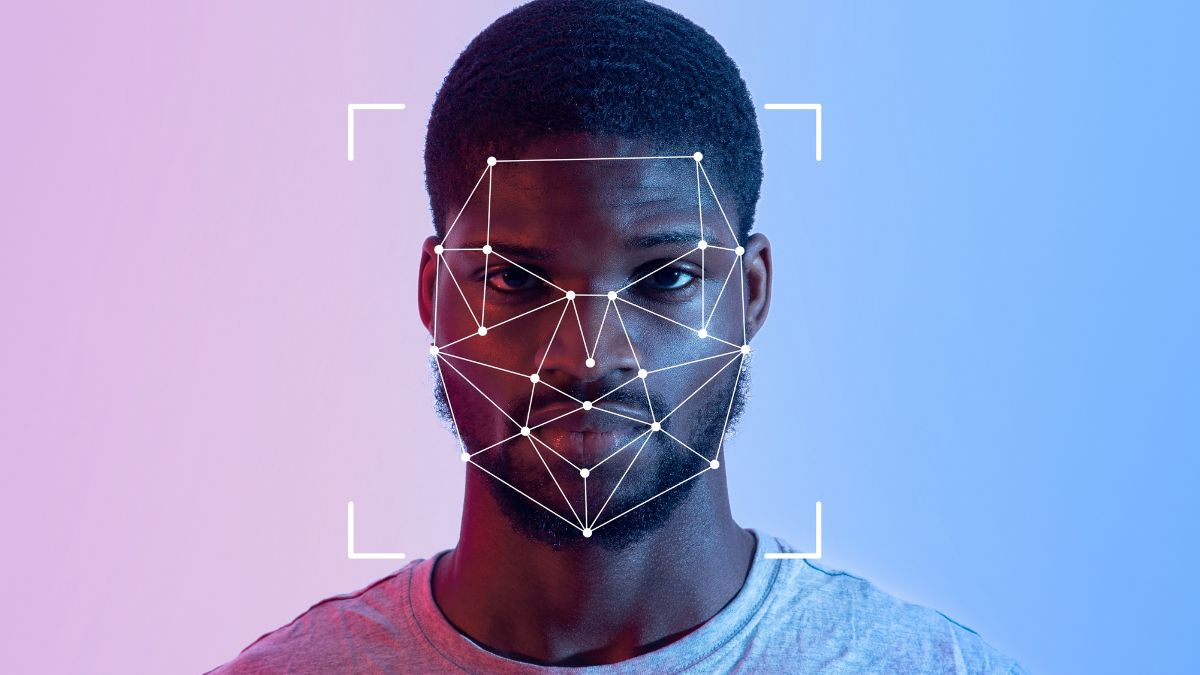

AI is already capable of producing realistic-looking images, yet spammers and scammers are using any old picture without caring how convincing it looks. The combination of “breaking news” messaging and an official-looking checkmark is enough to push it over the edge, and those liable to fall for it simply don’t examine imagery in detail in the first place. Twitter is going to have to invest serious time in clamping down on the spam and bots that help feed these disinformation waves. The big question is: Can the embattled social media giant do it?

Malwarebytes EDR and MDR remove all remnants of ransomware and prevent you from getting reinfected. Want to learn more about how we can help protect your business? Get a free trial below.