Voice authentication is back in the news with another tale of how easy it might be to compromise. University of Waterloo scientists have discovered a technique which they claim can bypass voice authentication with “up to a 99% success rate after only six tries”. In fact this method is apparently so successful that it is said to evade spoofing countermeasures.

Voice authentication is becoming increasingly popular for crucial services we make use of on a daily basis. It’s a particularly big deal for banking. The absolute last thing we want to see is easily crackable voice authentication, and yet that’s exactly what we have seen.

Back in February, reporter Joseph Cox was able to trick his bank’s voice recognition system with the aid of some recorded speech and a tool to synthesise his responses.

A user typically enrolls into a voice recognition system by repeating phrases, so the system at the other end gets a feel for how their voice sounds. As the Waterloo researchers put it:

When enrolling in voice authentication, you are asked to repeat a certain phrase in your own voice. The system then extracts a unique vocal signature (voiceprint) from this provided phrase and stores it on a server.

For future authentication attempts, you are asked to repeat a different phrase and the features extracted from it are compared to the voiceprint you have saved in the system to determine whether access should be granted.

This is where Cox and his synthesised vocals came into play—his bank’s system couldn’t distingusih between his real voice and a synthesised version of his voice. The response to this was an assortment of countermeasures that involve analysing vocals for bits and pieces of data which could signify the presence of a deepfake.

The Waterloo researchers have taken the game of cat and mouse a step further with their own counter-counermeasure that removes the data characterstic of deepfakes.

From the release:

The Waterloo researchers have developed a method that evades spoofing countermeasures and can fool most voice authentication systems within six attempts. They identified the markers in deepfake audio that betray it is computer-generated, and wrote a program that removes these markers, making it indistinguishable from authentic audio.

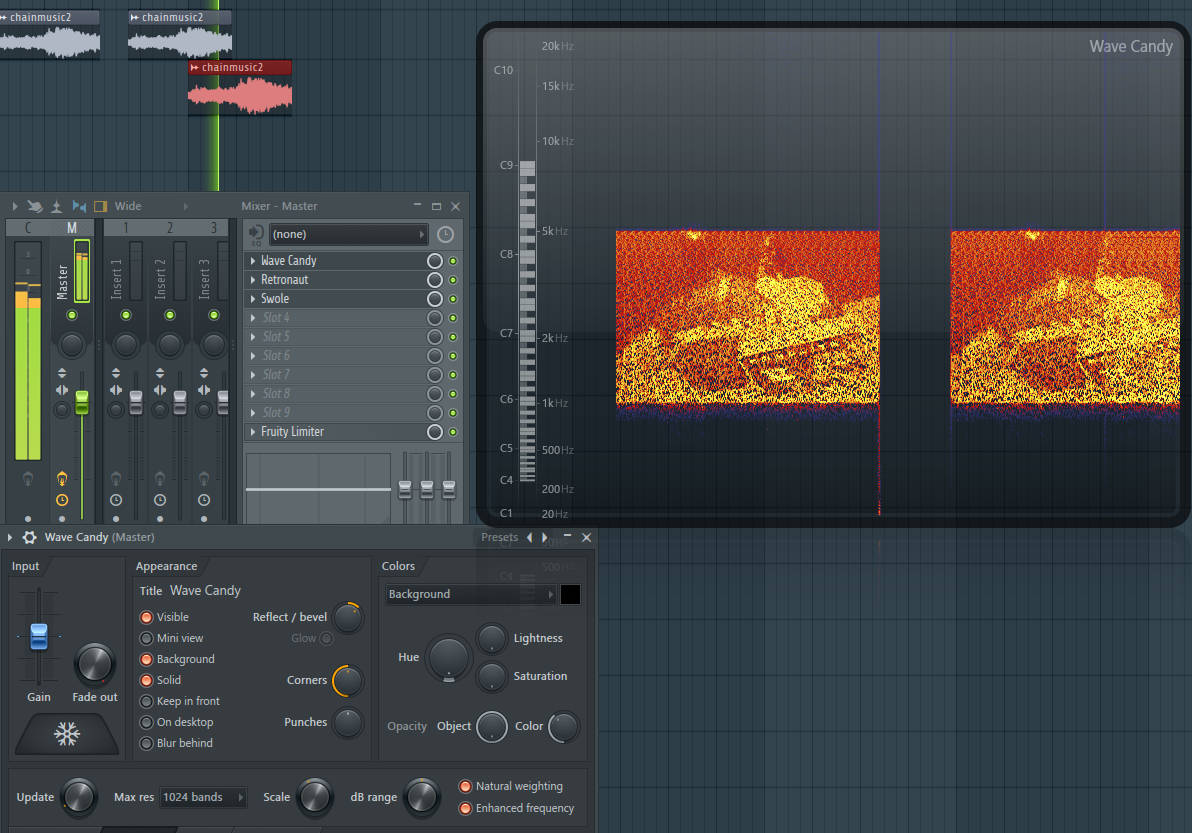

There are many ways to edit a slice of audio, and plenty of ways to see what lurks inside sound files using visualiser tools. Anything that wouldn’t normally be present can be traced, analysed, and altered or made to go away if needed.

As an example, loading up a spectrum analyser (which illustrates the audio signal in visible waves and patterns) may reveal images hidden inside of the sound. Below you can see a hidden image represented by the orange and yellow blocks every time the audio file plays. While the currently discussed research isn’t available outside of paid access, the techniques relied upon to find any deepfake generated cues will likely work along much the same lines. There will be telltale signs of synthetic markers in the sound files, and with these synthetic aspects removed the detection tools will potentially miss the now edited audio because it looks (and more importantly sounds) like the real thing.

It remains to be seen what organisations deploying voice authentication will make of this research. However, you can guarantee whatever they come up with will continue this game of cat and mouse for a long time to come.

We don’t just report on threats—we remove them

Cybersecurity risks should never spread beyond a headline. Keep threats off your devices by downloading Malwarebytes today.