Detroit law enforcement wrongly arrested a 32-year-old woman for a robbery and carjacking she did not commit. She was detained for 11 hours and had her phone taken as evidence before finally being allowed to leave. The false arrest was caused by a facial recognition error, the kind that privacy and civil liberties organisations have been warning about for some time now.

What makes this case particularly galling is that the surveillance footage did not show a pregnant woman, yet Porsche Woodruff was eight months pregnant at the time of her arrest.

How did this all begin? A Detroit police officer ran a facial recognition search on surveillance footage of a woman returning the carjacking victim’s phone to a gas station. The facial recognition tool flagged Woodruff via a 2015 mug shot kept on file from a previous, unrelated arrest. Despite knowing that the woman in the footage was not visibly pregnant, police showed the victim a lineup that included the old photo. The victim then wrongly identified Woodruff as the culprit.

Shortly afterwards, she was arrested for robbery and carjacking.

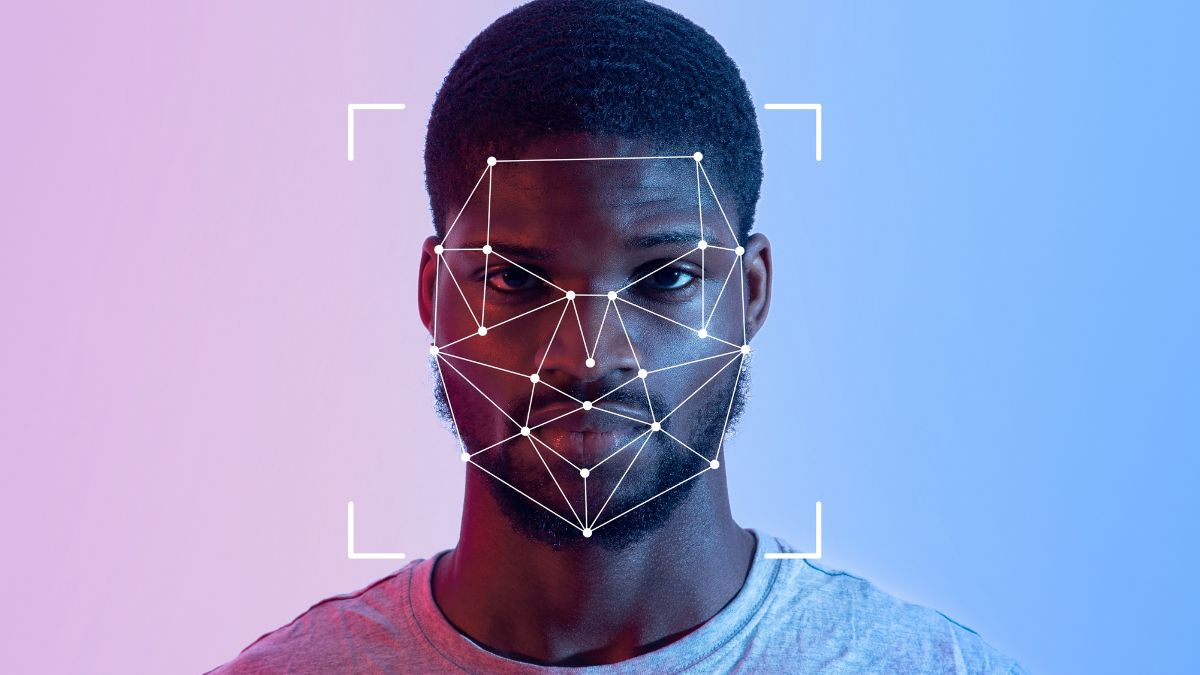

Ars Technica reports that law enforcement used something called DataWorks Plus to match surveillance footage against a database of criminal mug shots. DataWorks Plus bills itself as a “facial recognition and case management” technology. It provides “accurate, reliable facial candidates with advanced comparison…tools for investigations”. It also offers similar services for fingerprint, iris, and tattoo recognition.

Unfortunately for Woodruff, accuracy was on vacation the day her 2015 mug shot was wrongly identified as a match for the robbery in question.

She was charged in court with robbery and carjacking, but all charges were dismissed about a month later. She has now filed a wrongful arrest lawsuit against the city of Detroit, which seems quite reasonable given the circumstances.

The New York Times reports that this is the sixth recently reported case of someone being wrongly accused because facial recognition technology did not work as expected. It is the third such case in Detroit, and all six wrongly accused people are Black. A long-running concern about these technologies is that they tend to perform poorly on women and people with darker skin. The Ars post links to several reports and studies highlighting these consistent flaws.

Several US cities have banned the use of facial recognition technology, though this may change in the future due to lobbying and “a surge in crime”.

One would think that “you look like this person even though you’re eight months pregnant and they’re not” would keep someone out of a cell. Is faith in the supposed accuracy of this technology so great that Detroit police trusted it over the evidence of their own eyes?

Officers arrested Woodruff at her front door, and investigators used her older photo even though they had access to her current driver’s licence photo, issued in 2021. It seems very strange that nobody intervened when the technology side of the workflow was going off the rails. From the complaint, via CNN:

> When first confronted with the arrest warrant, Woodruff was “baffled and assuming it was a joke, given her visibly pregnant state,” the suit says. She and her fiancé “urged the officers to check the warrant to confirm the female who committed the robbery and carjacking was pregnant, but the officers refused to do so,” the complaint says.

Detroit law enforcement’s mistakes with facial recognition technology go back at least as far as 2018, when a man was wrongly flagged as a watch thief. In 2019, another man was briefly accused of stealing a phone until his attorney proved that police had once again accused the wrong person.

The American Civil Liberties Union (ACLU) of Michigan is now taking an interest. While it’s impossible to predict how the lawsuit will turn out, Woodruff appears to have a fairly strong case. The question is whether this will lead to any meaningful change in how law enforcement incorporates decision making into its technology workflows. Or will we see yet another of these cases six months down the line?
