In the medical world, sharing patient data between organizations and specialists has always been an issue. X-Rays, notes, CT scans, and any other data or related files have always existed and been shared in their physical forms (slides, paperwork).

When a patient needed to take results of a test to another practice for a second opinion or to a specialist for a more detailed look, it would require them to get copies of the documents and physically deliver them to the receiving specialists. Even with the introduction of computers into the equation, this manual delivery in some cases still remains common practice today.

In the medical field, data isn’t stored and accessed in the same way that it is in governments and private businesses. There is no central repository for a doctor to see the history of a patient, as there would be for a police officer accessing the criminal history of a given citizen or vehicle. Because of this, even with the digitization of records, sharing data has remained a problem.

The medical industry has stayed a decade behind the rest of the modern world when it comes to information sharing and technology. Doctors took some of their first steps into the tech world by digitizing images into a format called DICOM. But even with these digital formats, it still was, and sometimes still is, necessary for a patient to bring a CD with data to another specialist for analysis.

Keeping with the tradition of staying 10 years behind, only recently has this digital data been stored and shared in an accessible way. What we see today is individual practices hosting patient medical data on private and often in-house systems called PACS servers. These servers are brought online into the public Internet in order to allow other “trusted parties” to instantly access the data, rather than using the old manual sharing methods.

The problem is, while the medical industry finally joined the 21st century in info-TECH, it still remains a decade behind in info-SEC, resulting in patients’ private data being exposed and ripe for the picking by hackers. This is the issue that we’ll be exploring in this case study.

It’s in the setup

While there are hundreds of examples of exploitable medical devices and services that have been publicly exposed so far, I will focus in detail on one specific case that deals with a PACS server framework, a system that is highly prevalent in the industry and deserves attention because it can expose private patient data if not set up correctly.

The servers I chose to analyze are built on a framework called Dicoogle. While the setup of Dicoogle I discovered was insecure, the framework itself is not problematic. In fact, I have respect for the developers, who have done a great job creating a way for the medical world to share data. As with any technology, oftentimes the security comes down to how the individual company decides to implement it. This case is no exception.

Technical details

Let’s start with discovery and access. Generally speaking, anything that exists on the Internet can theoretically be searched for and found. A server on the Internet cannot hide: it is just an IP address, nothing more. So, using Shodan and some Google search terms, it was not difficult to find a live server running Dicoogle in the wild.

The problem begins when we look at its access control. The specific server I reviewed simply allowed access to its front-end web panel. There were absolutely no IP or MAC address restrictions. There is a good argument that this database should not have been exposed to the Internet in the first place; rather, it should run on a local network accessible only by VPN. But since security was likely not considered in the setup, I did not need to do any of the more difficult targeted reconnaissance that more secure servers require just to find the front page.

Now, we could give them the benefit of the doubt and say, “Maybe there are just so many people from all over the world legitimately needing access, so they purposely left it open but secured it in other ways.”

After we continue on to look at the remaining OPSEC fails, we can strike this “benefit of the doubt” from our minds. I will make a note that I did happen to come across implementations of Dicoogle that were not susceptible and remained intact. This fact just serves as a confirmation that in this case, we are indeed looking at an implementation error.

Moving on, just as a burglar trying to break into a house will not pull out his lock pick set before simply turning the door handle, we do not need to try any sophisticated hacks if the default credentials still exist in the system being audited.

Sadly, this was the case here. The server had the default creds, which are built into Dicoogle when first installed.

USERNAME: dicoogle

PASSWORD: dicoogle

This type of security fail is all too common throughout any industry.
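For administrators who want to catch this on their own systems, the check can be sketched in a few lines of Python. This is a minimal illustration, not part of Dicoogle: the helper names and the sample account list are hypothetical, and only the default pair itself comes from the installation described above.

```python
# Hypothetical audit helper; only the default pair is taken from the article.
DEFAULT_CREDENTIALS = {("dicoogle", "dicoogle")}


def uses_default_credentials(username: str, password: str) -> bool:
    """Return True if the pair matches a known factory default."""
    return (username, password) in DEFAULT_CREDENTIALS


def audit_accounts(accounts):
    """Return the usernames that are still protected by a default password."""
    return [user for user, pw in accounts if uses_default_credentials(user, pw)]


if __name__ == "__main__":
    # Sample data for illustration only.
    accounts = [
        ("dicoogle", "dicoogle"),
        ("radiology", "a-long-unique-passphrase"),
    ]
    print(audit_accounts(accounts))
```

Running a check like this as part of deployment would flag the account before the server ever goes online.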

However, our job is not yet done. I wanted to assess this setup in as many ways as possible to see if there were any other security fails. Default creds is just too lame of a bypass to stop there, and the problem is obviously easy enough to fix. So I began looking into Dicoogle’s developer documentation.

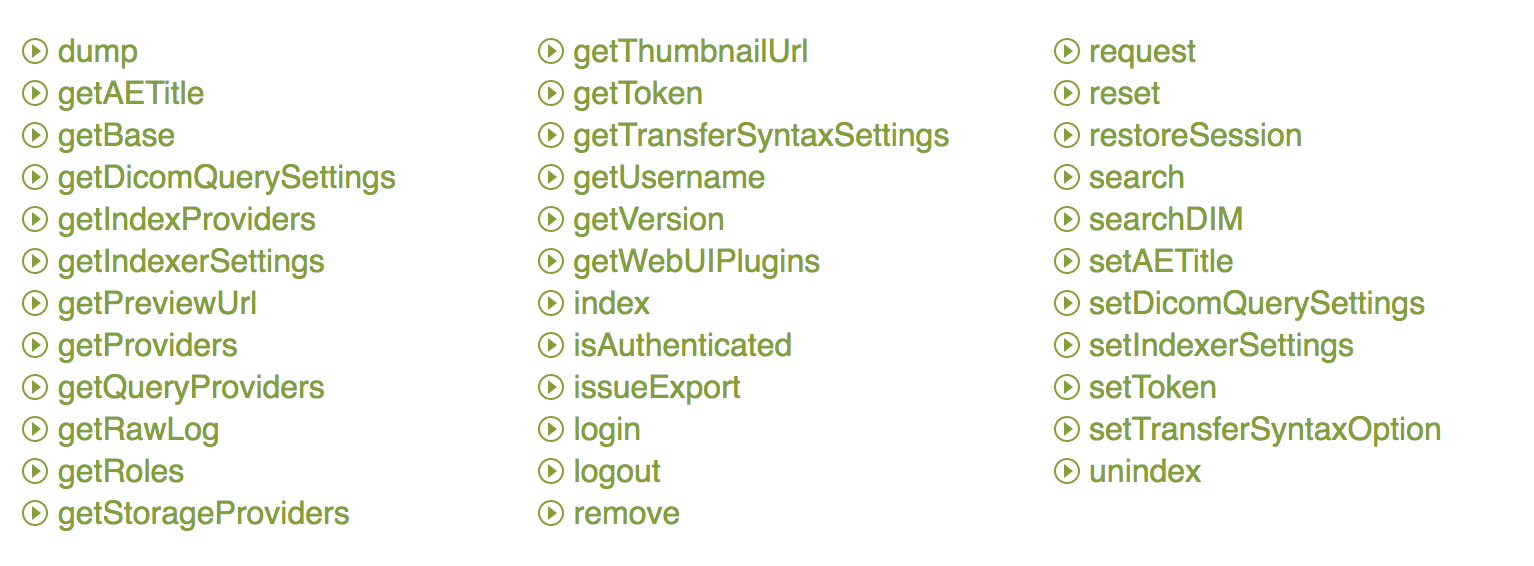

I realized that there are a number of API calls that were created for developers to build custom software interacting with Dicoogle. These APIs are either JavaScript, Python, or REST based. Although there are modules for authentication available for this server, they are not activated by default and require some setup. So, even if this target had removed the default credentials to begin with, the login could still be easily circumvented, because all of the patient data can be accessed via the API without any authentication.

This blood is not just on the hands of the team who set up the server; unfortunately, the blame also lies in part with Dicoogle. When you develop software, especially software that is almost guaranteed to contain sensitive data, security should be implemented by design, and should not require the user to take additional actions. That being said, the majority of the blame belongs to the host of this service, as they are the ones who are handling clients’ sensitive data.
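If you run such a server yourself, one way to confirm whether the API answers without a login is to send it a single unauthenticated request and look at the status code. The sketch below uses only Python's standard library. The function names and the three-way classification are my own assumptions, not anything Dicoogle provides, and it should only be pointed at a server you are authorized to test.

```python
import urllib.error
import urllib.request


def classify_status(status: int) -> str:
    """Interpret the HTTP status of a request sent without credentials."""
    if status in (401, 403):
        return "protected"      # the server demanded authentication
    if 200 <= status < 300:
        return "exposed"        # the server answered without credentials
    return "inconclusive"       # redirects, server errors, and so on


def probe(url: str, timeout: float = 5.0) -> str:
    """Send one unauthenticated GET to a server you are authorized to test."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return classify_status(response.status)
    except urllib.error.HTTPError as err:
        # urlopen raises HTTPError for 4xx/5xx; the code is still informative.
        return classify_status(err.code)
    except OSError:
        # Covers URLError, refused connections, and timeouts.
        return "unreachable"
```

A result of "exposed" on an API URL means the authentication modules have not been switched on.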

Getting into a bit of detail now, you can use any of the following commands via programming or REST API to access this data and circumvent authentication.

[SERVER_IP]?query=StudyDate:[20141101 TO 20141103]

Using the results from this query, the attacker can obtain individual user IDs, then perform the following call:

/dump?uid=[retrievedID]

All of the internal data and metadata from the DICOM image can be pulled.

We can access all information contained within the databases using a combination of these API calls, again, without needing any authentication.
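To make the shape of these two requests concrete, here is a minimal Python sketch that only builds the URLs, with the query string properly percent-encoded. The function names and the example host are hypothetical. The address of the search endpoint is left to the caller, since only the `query` and `uid` parameters and the `/dump` path are shown above.

```python
from urllib.parse import urlencode


def build_date_query_url(search_endpoint: str, start: str, end: str) -> str:
    """Build the StudyDate range query shown above (dates as YYYYMMDD)."""
    query = f"StudyDate:[{start} TO {end}]"
    return f"{search_endpoint}?{urlencode({'query': query})}"


def build_dump_url(server_base: str, uid: str) -> str:
    """Build the /dump call for one ID returned by the search."""
    return f"{server_base}/dump?{urlencode({'uid': uid})}"


if __name__ == "__main__":
    # Placeholder host; substitute a server you are authorized to test.
    base = "http://pacs.example.test"
    print(build_date_query_url(base, "20141101", "20141103"))
    print(build_dump_url(base, "1.2.840.1"))
```

Note that neither URL carries a token, cookie, or password, which is exactly the problem.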

Black market data

“So what’s the big deal?” you might ask. “This data does not contain a credit card and sometimes not even a social security number.” We have seen that on the black market, medical data is much more valuable to criminals than a credit card, or even a social security number alone. We have seen listings that show medical data selling for sometimes 10 times what a credit card can go for.

So why is this type of info so valuable to criminals? What harm can criminals do with a breach of this system?

For starters, a complete patient file will contain everything from SSN to addresses, phone numbers, and all related data, making it a complete package for identity theft. These databases contain full patient data and can easily be turned around and sold on the black market. Selling to another criminal may be less money, but it is easier money. Now, aside from basic ID theft and resale, let’s talk about some more targeted and interesting use cases.

The simplest case: vandalism and ransom. In this specific case, since the hacker has access to the portal, deleting this data or holding it for ransom is definitely a possibility.

The next potential crime is more interesting and could be a lot more lucrative for criminals. As I have described in this article, medical records are stored in silos, and it is not possible for one medical professional to cross check patient data with any kind of central database. So, two scenarios emerge.

Number one is modification of patient data for tax fraud. A criminal could take individual patient records, complete with CT scan images or X-rays, and, using freely available DICOM image editors and related software, modify legitimate patient files to contain imposter information. When the imposter takes a CD to a doctor to become a new patient, the doctor will be none the wiser. So it becomes quite feasible for the imposter to claim Medicare benefits or some kind of tax refund based on a condition documented in the file, which they do not actually have.

Number two is even more extreme and lucrative. There have been documented cases where criminals create fake clinics, and submit this legitimate but stolen data to their own fake clinic on behalf of the compromised patient, unbeknownst to them. They then can receive the medical payouts from insurance companies without actually having a patient to work on.

Takeaways

There are three major takeaways from this research. The first is for the client of a medical clinic. Given how much known and proven insecurity exists in the medical world, if you are a patient concerned about your identity being stolen, it may be wise to ask how your data is being stored when you take it to any medical facility. If they do not have details on how your data is being safely stored, you are probably better off asking that your data be given to you the old-fashioned way: on a CD. Although this may be inconvenient in some ways, at least it will keep your identity safe.

The second takeaway is for medical clinics or practices. If you are not prepared to invest the time and money into proper security, it is irresponsible for you to offer this type of storage. Either stick to the old school patient data methods or spend the time making sure you keep your patients’ identities safe.

At the bare minimum, if you insist on rolling out your own service, keep it local to your organization and allow access only to pre-defined machines. A username and password is not enough of a security measure, let alone the default one. Alternatively, if you do not have the technical staff to properly implement PACS servers, it is best to pay for a reputable cloud-based service that has a good record and documented security practices. You should not jump into the modern information world if you are not prepared to understand the necessary constraints that go along with it.
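As a rough illustration of what "pre-defined machines" can mean in practice, the sketch below checks a client address against an allowlist using Python's standard `ipaddress` module. The network ranges and function name are made-up examples. In a real deployment this kind of rule usually belongs in a firewall or VPN gateway rather than in application code.

```python
import ipaddress

# Example allowlist (hypothetical values): one internal subnet and one
# specific workstation.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("10.0.5.0/24"),
    ipaddress.ip_network("192.168.10.25/32"),
]


def is_allowed(client_ip: str) -> bool:
    """Return True only if the client address falls inside the allowlist."""
    try:
        address = ipaddress.ip_address(client_ip)
    except ValueError:
        # Anything that is not a valid IP address is rejected outright.
        return False
    return any(address in network for network in ALLOWED_NETWORKS)
```

The important design choice is deny by default: anything not explicitly listed, including malformed input, is refused.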

And finally, the last takeaway is for the developers. There have been enough examples over the last five years to prove that users either do not know or do not care enough about security. Part of this responsibility lies with you to create software that does not have the potential to be easily abused or put users in danger.