Life must be hard for companies that try to make a living by invading people’s privacy. You almost feel sorry for them. Except I don’t.

The UK’s Information Commissioner’s Office (ICO)—an independent body set up to uphold information rights—has announced its provisional intent to impose a potential fine of just over £17 million (roughly US$23 million) on Clearview AI.

In addition, the ICO has issued a provisional notice to stop further processing of the personal data of people in the UK and delete what Clearview AI has, following alleged serious breaches of the UK’s data protection laws.

What is Clearview AI?

Clearview AI was founded in 2017, and started to make waves when it turned out to have created a groundbreaking facial recognition app. You could take a picture of a person, upload it and get to see public photos of that person, along with links to where those photos appeared.

According to its own website, Clearview AI provides a “revolutionary intelligence platform”, powered by facial recognition technology. The platform includes a facial network of 10+ billion facial images scraped from the public internet, including news media, mugshot websites, public social media, and other open sources.

Yes, scraped from social media, which means that if you’re on Facebook, Twitter, Instagram or similar, then your face may well be in the database.

Clearview AI says it uses its faceprint database to help law enforcement fight crime. Unfortunately, its customers are not just law enforcement. Journalists uncovered that Clearview AI also licensed the app to at least a handful of private companies for security purposes.

Clearview AI ran a free trial with several law enforcement agencies in the UK, but these trials have since been terminated, so there seems to be little reason for Clearview to hold on to the data.

And worried citizens who want their data removed, which companies have to do upon request under the GDPR, are often required to hand over even more data, including photographs, before Clearview AI will consider the removal.

It’s not just the UK that’s worried. Earlier this month, the Office of the Australian Information Commissioner (OAIC) ordered Clearview AI to stop collecting photos taken in Australia and remove the ones already in its collection.

Offenses

The ICO says that the images in Clearview AI’s database are likely to include the data of a substantial number of people from the UK and these may have been gathered without people’s knowledge from publicly available information online.

The ICO found that Clearview AI has failed to comply with UK data protection laws in several ways, including:

- Failing to process the information of people in the UK in a way they are likely to expect or that is fair

- Failing to have a process in place to stop the data being retained indefinitely

- Failing to have a lawful reason for collecting the information

- Failing to meet the higher data protection standards required for biometric data (classed as ‘special category data’ under the GDPR and UK GDPR)

- Failing to inform people in the UK about what is happening to their data

- And, as mentioned earlier, asking for additional personal information, including photos, which may have acted as a disincentive to individuals who wish to object to their data being processed

Clearview AI Inc now has the opportunity to make representations in respect of these alleged breaches set out in the Commissioner’s Notice of Intent and Preliminary Enforcement Notice. These representations will then be considered and a final decision will be made.

As a result, the proposed fine and preliminary enforcement notice may still change, or there may be no further formal action at all.

There is some hope for Clearview AI if you look at past fines imposed by the ICO.

Marriott was initially expected to receive a fine of 110 million euros after a data breach that happened in 2014 and wasn’t disclosed until 2018, but it ended up having to pay “only” 20 million euros. We can expect to hear a final decision against Clearview AI by mid-2022.

Facial recognition

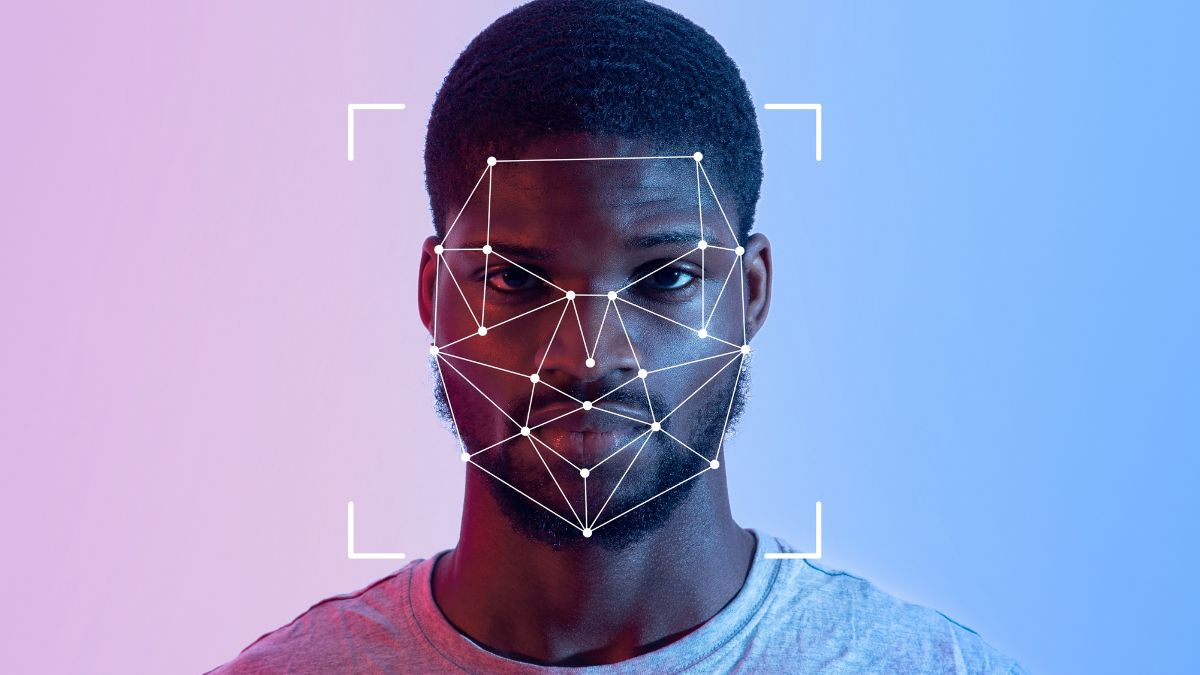

A facial recognition system is a technology capable of matching a human face from a digital image or a video frame against a database of faces, typically employed to identify and/or authenticate users.
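To make that matching step a bit more concrete, here is a minimal Python sketch. It rests on assumptions that are not taken from Clearview AI or any real product: that each face has already been converted into a numeric vector (a “faceprint”), that similarity is measured with cosine similarity, and that a match counts only above a chosen threshold. The names `cosine_similarity`, `best_match`, `database`, and the threshold of 0.9 are all hypothetical, picked purely for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors of equal length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def best_match(probe, database, threshold=0.9):
    """Return (label, score) for the closest stored faceprint.

    The label is None when even the closest faceprint scores below the
    threshold, meaning no match is reported. The threshold is an
    illustrative assumption, not a recommended value.
    """
    best_label, best_score = None, -1.0
    for label, faceprint in database.items():
        score = cosine_similarity(probe, faceprint)
        if score > best_score:
            best_label, best_score = label, score
    if best_score < threshold:
        return None, best_score
    return best_label, best_score


# Toy 3-dimensional faceprints (made-up numbers). Real systems derive much
# longer vectors from images, typically with a neural network.
database = {
    "person_a": [0.9, 0.1, 0.2],
    "person_b": [0.1, 0.8, 0.5],
}

probe = [0.88, 0.12, 0.25]  # hypothetical faceprint of an uploaded photo
label, score = best_match(probe, database)
print(label, round(score, 3))
```

The threshold is where much of the trouble lives: set it too low and the system reports matches for people who merely look alike, set it too high and it misses genuine matches.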

Facial recognition technology has always been controversial. It makes people nervous about Big Brother. It has a tendency to deliver false matches for certain groups, like people of color. Police departments have had access to facial recognition tools for almost 20 years, but they have historically been limited to searching government-provided images, such as mugshots and driver’s license photos.

Gathering images from the public internet obviously makes for a much larger dataset, but that is not the purpose for which people posted those images.

It’s because of the privacy implications that some tech giants have backed away from the technology, or halted its development. Clearview AI clearly is not one of them. It is neither a tech giant nor a company that cares about privacy.